Will AI take my job? Part 1

Most people who are working now or will enter the workforce in the future are looking over their shoulders, wondering when the next wave of AI-related changes will crash on their shores.

Not a day goes by without headlines popping up on how AI will replace humans in the workplace.

Example:

NYT: This A.I. Company Wants to Take Your Job by Kevin Roose https://www.nytimes.com/2025/06/11/technology/ai-mechanize-jobs.html

The potential of AI to fundamentally transform the nature of work is one of the reasons why I started Veering Forward.

I want to share my personal thoughts on this topic over the coming weeks.

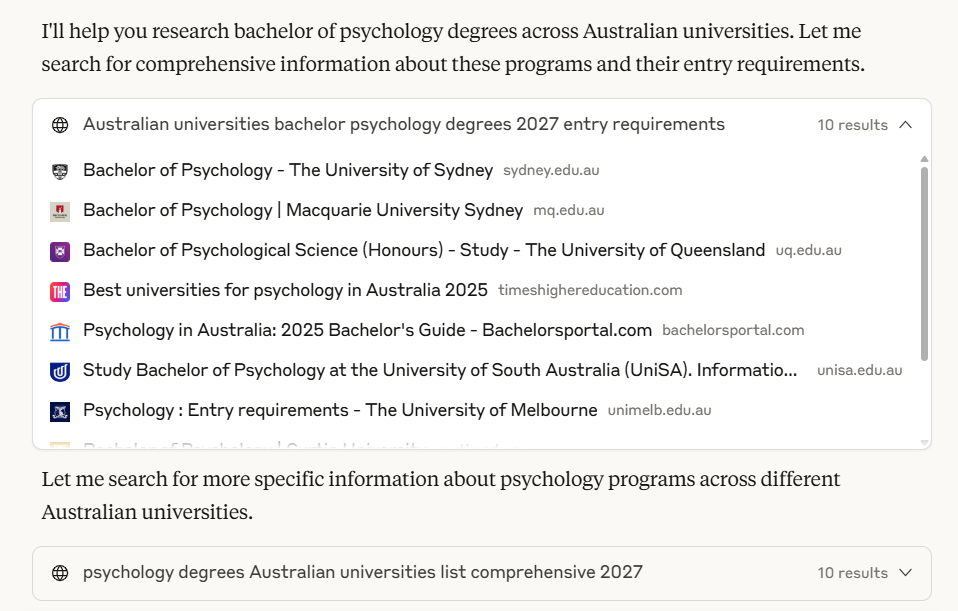

A few weeks ago, I asked Claude Sonnet 4 to conduct the following research.

I am a high school student looking to study bachelor of psychology in Australia in 2027. Search from websites of all Australian universities and identify all bachelor of psychology degrees, provide a summary of each degree, and provide details of their entry offer schemes for the degree if they have one. Include links to the university websites in your response so I can double check the information provided

It started searching for information from different university websites:

And provided me with a list of seven universities.

It didn't give me information on early offer schemes, so I followed up with the following:

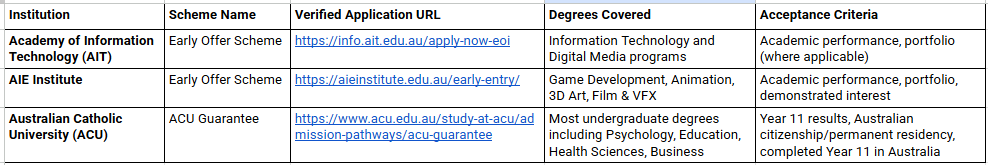

Read all data on this website https://www.uac.edu.au/current-applicants/undergraduate-applications-and-offers/early-offer-schemes-for-year-12-students and create a table of all institutions listed and their URL links under the how to apply section

After a few additional prompts (I asked Claude to check all the URLs as some on the UAC website were broken), I have a table with the information I sought.

I haven't checked the veracity of the data Claude returned, but even if it hallucinated some of it, I now have a much easier task to check.
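Checking whether the links in a table like this still work is one part of the review that can be scripted. Below is a minimal Python sketch of that idea, not what Claude did behind the scenes. The function names `fetch_status` and `find_broken_links` are my own for illustration, and it only tells you whether a page responds, not whether the information on it is correct.

```python
from http.client import HTTPException
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_status(url, timeout=10):
    """Return the HTTP status code for url, or None if no response was received."""
    try:
        # Some sites reject requests without a User-Agent header.
        request = Request(url, headers={"User-Agent": "link-check-sketch"})
        with urlopen(request, timeout=timeout) as response:
            return response.status
    except HTTPError as error:
        # The server answered, but with an error status such as 404.
        return error.code
    except (OSError, ValueError, HTTPException):
        # DNS failure, timeout, refused connection, malformed URL, and so on.
        return None


def find_broken_links(urls, fetch=fetch_status):
    """Return (url, status) pairs for links that did not come back with a 2xx status."""
    broken = []
    for url in urls:
        status = fetch(url)
        if status is None or not 200 <= status < 300:
            broken.append((url, status))
    return broken
```

To use it, pass in the list of URLs from the table, e.g. `find_broken_links(["https://www.uac.edu.au/"])`. Some websites block automated requests, so a flagged link is a prompt to look at the page yourself rather than proof that it is dead.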

Below is the table I shared in last week's post, with my answers for the task above in the last column.

| Should you start a task with AI? | 🧠 | 🤖 | 🎓 |
|---|---|---|---|
| 1. Do you enjoy the task? | Yes | No | No |
| 2. Are you good at the task? | Yes | No | OK |
| 3. How long will it take to complete the task without AI? | Fast | A long time | A long time |
| 4. How good is AI in executing the task in one go? | Poor | Excellent | Good |
| 5. What are the stakes? | High | Low | Low-medium |

Based on Q1-4, using AI to start the task is a no-brainer (pardon the pun).

Q5 is an interesting one. My answer is low-medium because this is personal research, and I plan to visit at least some of the websites for further information anyway, so what Claude provided me is a useful starting point.

However, if it were my job to compile the information for a company or a school, the stakes would be much higher. I would cross-check every answer Claude provided and update the table to remove errors and make the information more insightful (e.g. what does "demonstrated interest" mean in AIE Institute's acceptance criteria?). Using Claude to create the table would still save me time, but I wouldn't put it in a report or publish it without substantial review and editing.

This is not an isolated example. Based on (1) my experience with AI projects at Day Job, (2) my personal use of LLMs, and (3) talking to others and observing how AI tools are being used in companies and industries, the technology can achieve a lot and can complete certain tasks, but in most instances we still need a human in the loop.

And it's not just because AI can make up information out of thin air.

There was a lot of fanfare last week when OpenAI released its ChatGPT Agent. The pitch is that it can control your computer and complete tasks on your behalf with a prompt. A journalist at The Verge tried it to buy flowers for a friend. This is their conclusion - I recommend reading the whole article to see the experience for yourself.

ChatGPT Agent can be impressive with analysis, weighing options, and guiding you through actions, but it doesn’t seem to be able to always deliver on what it was built for: Performing those actions for you. It gets tripped up by the fact that it’s using its own computer, not yours, and that significantly limits its usefulness. Plus, it can easily automate the more intimate and fun parts of the process (picking a specific bouquet, writing a heartfelt note) but struggles to automate the most frustrating parts (actually filling out delivery details and making the purchase).

What does it all mean?

In some industries, AI is already transformational.

As an example, in accounting and law, a lot of the work that junior staff do - reading financial statements, writing first drafts and other "grunt work" - can now be completed by LLMs and other AI tools for mid-level and senior staff to review. There is anecdotal evidence that graduate hiring in accounting and law firms is being scaled back.

However, even in those industries, we're not yet at a point where we can rely on AI to replace humans completely. Limitations of current technology, risk of errors and biases, privacy concerns, and regulatory guardrails are some of the reasons why we still need humans involved.

And - this is an area that I personally want to research - today's AI technology is not yet operating meaningfully at the top of the funnel, i.e. innovation. Yes, it can research, analyse, write and execute (to a degree), but humans are still identifying the problems to be solved and coming up with new ways to solve them.

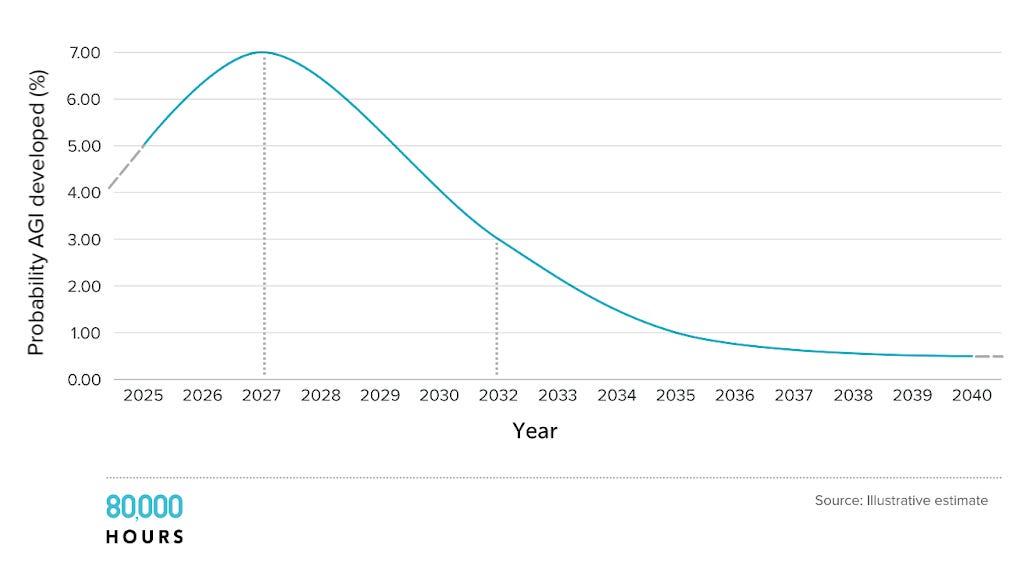

The caveat, of course, is that this is the case for now. New LLMs and AI models will arrive on the scene and likely wow us again. The capabilities of ChatGPT Agent and other AI tools will improve. And the "holy grail" - Artificial General Intelligence (AGI) - may just be around the corner.

(Dwarkesh Patel doesn't think so yet - it's worth reading his thoughts on why.)

Why I don’t think AGI is right around the corner by Dwarkesh Patel https://www.dwarkesh.com/p/timelines-june-2025

What will that future look like if/when AGI is here? Your guess is as good as mine.

For now, someone who understands and harnesses what AI can do, and excels in what AI can't do well, is more likely to take your job than AI itself.

Why don't you ...

Think about your current job, or a job that you want in the future, and see which parts may be meaningfully done by an LLM or other AI tools, and which parts are harder for AI to take over.

I hope this post offers you some food for thought.

Until next time!

Vee